It's not 100% .NET Coherence, since a developer still has to modify configuration files on the Java service to specify the generic POF serializer.

So to start: The .NET Class:

    [POFSerializableObject(StoreMetadata = true)]
    public class Person
    {
        [POFSerializableMember(Order = 0, WriteAsType = POFWriteAsTypeEnum.Int16)]
        public int ID { get; set; }

        [POFSerializableMember(Order = 1)]
        public string FirstName { get; set; }

        [POFSerializableMember(Order = 2)]
        public string LastName { get; set; }

        [POFSerializableMember(Order = 3)]
        public string Address { get; set; }

        [POFSerializableMember(Order = 4)]
        public string Title { get; set; }

        public Person()
        {
        }
    }

Note the StoreMetadata=true argument. When StoreMetadata is specified, the generic serializer first writes a string array of property names. On the server side, we must specify a generic Java serializer so that objects are stored in a form that can be filtered.

Here’s an interesting note: unless filters are invoked, the object WILL NOT be deserialized on the Java side. That means Put, Get, and GetAll calls without a filter do not require metadata to be written into the cache.

Now, to specify the Java generic serializer. Distributed Cache Configuration:

    <distributed-scheme>
      <scheme-name>dist-default</scheme-name>
      <serializer>
        <class-name>com.tangosol.io.pof.ConfigurablePofContext</class-name>
        <init-params>
          <init-param>
            <param-type>string</param-type>
            <param-value>custom-types-pof-config.xml</param-value>
          </init-param>
        </init-params>
      </serializer>
      <backing-map-scheme>
        <local-scheme/>
      </backing-map-scheme>
      <autostart>true</autostart>
    </distributed-scheme>

custom-types-pof-config.xml

    <pof-config>
      <user-type-list>
        <!-- include all "standard" Coherence POF user types -->
        <include>coherence-pof-config.xml</include>
        <!-- include all application POF user types -->
        <user-type>
          <type-id>1001</type-id>
          <class-name>com.Coherence.Contrib.POF.POFGenericObject</class-name>
          <serializer>
            <class-name>com.Coherence.Contrib.POF.POFGenericSerializer</class-name>
            <init-params>
              <init-param>
                <param-type>int</param-type>
                <param-value>{type-id}</param-value>
              </init-param>
              <init-param>
                <param-type>boolean</param-type>
                <param-name>LoadMetadata</param-name>
                <param-value>true</param-value>
              </init-param>
            </init-params>
          </serializer>
        </user-type>
      </user-type-list>
    </pof-config>

For now, you must specify a user-type for each .NET object. On the Java side, the server uses the POFGenericSerializer, and all values are kept in an object array indexed by the property names. A generic getProperty method is implemented to allow filtering on any property that was used in the serialization. Property evaluation happens on the server, so only filtered data is returned.
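The server-side layout described above can be pictured with a small sketch. The class below is a hypothetical stand-in, not the actual POFGenericObject source (which is not shown here). It assumes the property names from the metadata and the property values are held in two parallel collections, and that getProperty is a simple lookup by name.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the server-side generic object.
// Assumption: names (from the metadata) and values are stored in the same order.
class GenericObjectSketch {
    private final List<String> names;  // property names, as sent in the metadata
    private final Object[] values;     // property values, position-matched to names

    GenericObjectSketch(String[] names, Object[] values) {
        if (names.length != values.length) {
            throw new IllegalArgumentException("names and values must have the same length");
        }
        this.names = Arrays.asList(names.clone());
        this.values = values.clone();
    }

    // Look a value up by property name; returns null for an unknown name.
    Object getProperty(String name) {
        int index = names.indexOf(name);
        return index < 0 ? null : values[index];
    }

    public static void main(String[] args) {
        GenericObjectSketch person = new GenericObjectSketch(
            new String[] {"ID", "FirstName", "LastName", "Address", "Title"},
            new Object[] {7, "First Name 7", "LastName 7", "Some address", "Mr"});
        System.out.println(person.getProperty("Title"));   // prints "Mr"
        System.out.println(person.getProperty("Missing")); // prints "null"
    }
}
```

Because every property is reachable through one method that takes the property name, a filter only needs to know the name used on the .NET side; no Java class mirroring Person is required.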

Here’s a simple loop to add objects to the cache:

    INamedCache cache = CacheFactory.GetCache("dist-Person");

    for (int i = 0; i < 1000; i++)
    {
        cache.Add(i, new Person()
        {
            ID = i,
            FirstName = string.Format("First Name {0}", i),
            LastName = string.Format("LastName {0}", i),
            Address = string.Format("Address {0}", Guid.NewGuid()),
            Title = i % 2 == 1 ? "Mr" : "Mrs"
        });
    }
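To get a feel for what a property filter over this data would select, here is a plain-Java model of it. This is only a sketch under stated assumptions: an ordinary Map stands in for the cache, each entry is a map of property name to value, and the class and method names (TitleFilterSketch, sampleData, filterByProperty) are made up for illustration. No Coherence classes are involved.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Plain-Java model of the sample data and a property filter.
// Assumption: a Map stands in for the cache; this is not the Coherence API.
class TitleFilterSketch {
    // Build the same 1000 entries as the loop above (Address omitted for brevity).
    static Map<Integer, Map<String, Object>> sampleData() {
        Map<Integer, Map<String, Object>> cache = new LinkedHashMap<>();
        for (int i = 0; i < 1000; i++) {
            Map<String, Object> person = new LinkedHashMap<>();
            person.put("ID", i);
            person.put("FirstName", "First Name " + i);
            person.put("LastName", "LastName " + i);
            person.put("Title", i % 2 == 1 ? "Mr" : "Mrs");
            cache.put(i, person);
        }
        return cache;
    }

    // Keep only the entries whose named property equals the expected value.
    static Map<Integer, Map<String, Object>> filterByProperty(
            Map<Integer, Map<String, Object>> cache, String property, Object expected) {
        return cache.entrySet().stream()
            .filter(e -> expected.equals(e.getValue().get(property)))
            .collect(Collectors.toMap(Map.Entry::getKey, Map.Entry::getValue));
    }

    public static void main(String[] args) {
        Map<Integer, Map<String, Object>> misters =
            filterByProperty(sampleData(), "Title", "Mr");
        System.out.println(misters.size()); // prints 500 (the odd-numbered entries)
    }
}
```

In the real setup the equivalent evaluation runs on the server, so a client asking for Title equal to "Mr" would receive only the matching half of the entries rather than all 1000.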

A cool side effect: data can be accessed from .NET and from Java code in the same fashion.

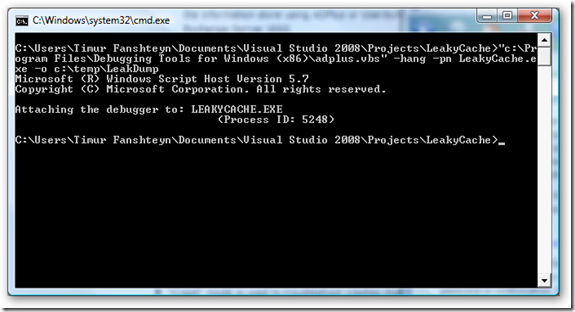

99.9% pure .NET Coherence (100 % No Java Code Required)

![clip_image002[12] clip_image002[12]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjkAF8BzLKq9slY7lhw9dSUGjj5wbx4ndBSDi9XgexEPvBon1PqhDcTnkLZzWtWEyUo4slVkoXLd0Hgq-T5wxsaKs61yozVerpY2XCAChyCkaHwkW5sHqOY9BXdCjwi7PPsj27J/?imgmax=800)